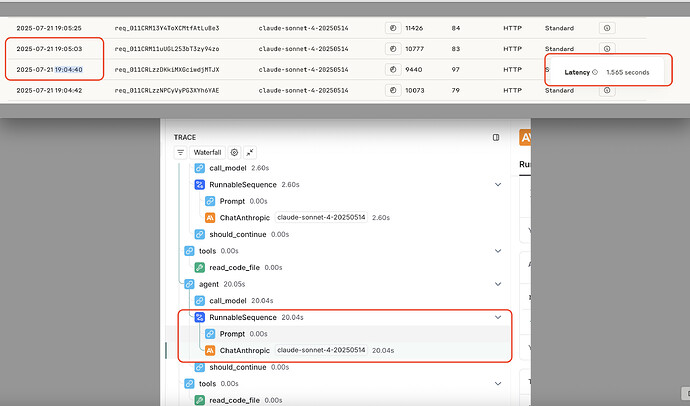

I have a simple react implementation using the create_react_agent method. I am seeing that when checking traces in LangSmith, the LLM calls latency is being reported way higher in LangSmith than in the LLM logs. See example below:

The same LLM call is reported as 20.04 seconds in Langsmith, though in the LLM logs it is reported as 1.565 seconds.

Do you know what can be adding the extra 18 seconds?

This is my implementation:

    from typing import Annotated

    from langchain.chat_models import init_chat_model
    from langchain_core.messages import AnyMessage
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_core.runnables import RunnableConfig
    from langchain_core.tools import tool
    from langgraph.prebuilt import create_react_agent
    from langgraph.prebuilt.chat_agent_executor import AgentState
    from pydantic import BaseModel, Field

    from .tools import rag_search_code, read_code_file, attempt_completion, list_files

    # Model configuration
    REACT_MODEL = "claude-sonnet-4-20250514"
    # REACT_MODEL = "o3-mini"

    # Initialize the language model
    react_llm = init_chat_model(model=REACT_MODEL, temperature=0)

    # Define the available tools
    tools = [read_code_file, list_files, attempt_completion]


    def prompt(state: AgentState, config: RunnableConfig) -> list[AnyMessage]:
        # Read the per-run folder structure passed in through the config
        project_folder_structure = config["configurable"].get(
            "project_folder_structure", ""
        )

        # Define the ReAct agent prompt
        system_content = """Lorem ipsum
    """

        user_content = f"""
    Project Folder Structure:
    {project_folder_structure}
    """

        # Prepend the system and context messages to the conversation so far
        return [
            {"role": "system", "content": system_content},
            {"role": "user", "content": user_content},
        ] + state["messages"]


    def create_react_agent_workflow():
        """
        Create a ReAct-style agent workflow for code analysis and assistance.

        Returns:
            A compiled LangGraph agent ready for execution
        """
        # Create the ReAct agent with the defined tools and prompt
        agent = create_react_agent(model=react_llm, tools=tools, prompt=prompt)
        return agent


    async def run_react_agent_async(
        query: str,
        username: str,
        project_folder_structure: str = "",
        config: dict | None = None,
    ) -> dict:
        """
        Run the ReAct agent asynchronously with a given query.

        Args:
            query: The user's question or request
            username: The username for project-specific operations
            project_folder_structure: The project folder structure string
            config: Additional configuration options

        Returns:
            The agent's response
        """
        # Create the agent
        agent = create_react_agent_workflow()

        # Prepare the configuration
        run_config = {
            "configurable": {
                "project_folder_structure": project_folder_structure,
                "username": username,
                **(config or {}),
            }
        }

        # Execute the agent asynchronously
        result = await agent.ainvoke(
            {"messages": [{"role": "user", "content": query}]}, config=run_config
        )
        return result
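
To get a third data point alongside the LangSmith trace and the LLM logs, an awaited call such as `agent.ainvoke(...)` can be timed on the client side. The following is only a minimal sketch using the standard library: `timed` and `fake_llm_call` are hypothetical names introduced for illustration, and `fake_llm_call` is a stand-in for the real model call, not part of LangChain or LangGraph.

```python
import asyncio
import time
from typing import Any, Awaitable, Callable


async def timed(label: str, call: Callable[[], Awaitable[Any]]) -> tuple[Any, float]:
    """Await `call()` and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = await call()
    elapsed = time.perf_counter() - start
    print(f"{label}: {elapsed:.3f}s")
    return result, elapsed


async def fake_llm_call() -> str:
    # Hypothetical stand-in for the real model or agent call (assumption)
    await asyncio.sleep(0.05)
    return "ok"


# Measure the wall-clock time seen by the caller
result, elapsed = asyncio.run(timed("llm_call", fake_llm_call))
```

In the implementation above, the same wrapper could be applied inside `run_react_agent_async`, for example by passing `lambda: agent.ainvoke(...)` as `call`. The number it prints is the wall-clock time seen by the caller, so it includes any time the coroutine spends waiting on the event loop and not only the provider's processing time.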