Hi,

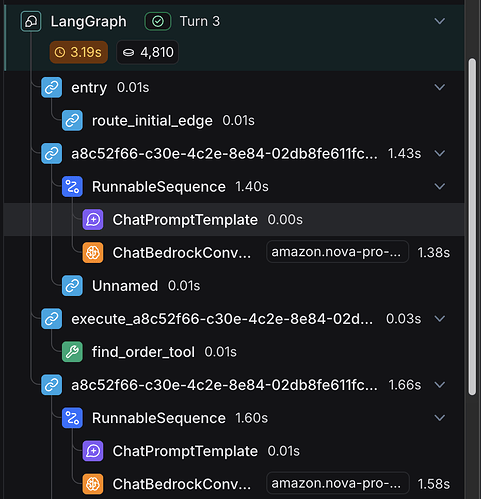

I am building a voice virtual agent, which is a simple ReAct agent with tool calling. This Python app is currently hosted on AWS and uses Nova Pro via AWS Bedrock as the language model. I consistently see about 2 seconds of execution time whenever ChatConverse is called; the attached screenshot shows two examples. I have also tried OpenAI's 4o-mini, and the performance was very similar. This feels too slow for a voice experience.

The first call uses the language model to decide which tool to call, and the second call converts the tool output into a response for the user.
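Before tuning anything, it may help to time each of the two calls separately and to distinguish time-to-first-token from total generation time, since a voice agent can start speaking as soon as the first tokens arrive. This is a minimal sketch, assuming each model call can be exposed as an iterator of text chunks; `time_stream` and `fake_stream` are hypothetical helpers, and `fake_stream` is only a stand-in for the real model call, not a LangChain or Bedrock API.

```python
import time
from typing import Callable, Iterable, Optional


def time_stream(stream_factory: Callable[[], Iterable[str]]) -> dict:
    """Consume a text stream, recording time-to-first-token and total time."""
    start = time.perf_counter()
    first_token_s: Optional[float] = None
    chunks = []
    for chunk in stream_factory():
        if first_token_s is None:
            # Latency until the first chunk arrives (what a listener "feels").
            first_token_s = time.perf_counter() - start
        chunks.append(chunk)
    total_s = time.perf_counter() - start
    return {"text": "".join(chunks), "ttft_s": first_token_s, "total_s": total_s}


def fake_stream(tokens, delay_s: float = 0.01) -> Callable[[], Iterable[str]]:
    """Hypothetical stand-in for a streaming model call: yields tokens with a delay."""
    def factory():
        for tok in tokens:
            time.sleep(delay_s)
            yield tok
    return factory


if __name__ == "__main__":
    # Call 1: tool selection. Call 2: turning the tool output into a reply.
    tool_choice = time_stream(fake_stream(["get_weather", "(city='Paris')"]))
    answer = time_stream(fake_stream(["It is ", "sunny ", "in Paris."]))
    for name, r in [("tool selection", tool_choice), ("final answer", answer)]:
        print(f"{name}: ttft={r['ttft_s']:.3f}s total={r['total_s']:.3f}s")
```

Swapping `fake_stream` for a real streaming call would show whether the ~2 seconds is mostly waiting for the first token or mostly generation.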

My questions are:

- Is this performance expected?

- If not, what options can I explore to lower the latency?
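One option worth exploring is streaming the final response and handing each complete sentence to text-to-speech as soon as it arrives. This lowers perceived latency even if total generation time stays the same. Below is a sketch using boto3's `converse_stream` on the `bedrock-runtime` client. The model ID `amazon.nova-pro-v1:0` and the sentence-splitting regex are assumptions (some regions require a cross-region inference profile ID instead), and `stream_reply`, `text_deltas`, and `sentences` are hypothetical helper names.

```python
import re
from typing import Iterable, Iterator

# Assumption: a sentence ends at ., ! or ? followed by whitespace.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def text_deltas(events: Iterable[dict]) -> Iterator[str]:
    """Yield text fragments from Bedrock Converse stream events."""
    for event in events:
        delta = event.get("contentBlockDelta", {}).get("delta", {})
        if "text" in delta:
            yield delta["text"]


def sentences(fragments: Iterable[str]) -> Iterator[str]:
    """Group streamed fragments into complete sentences for TTS."""
    buffer = ""
    for frag in fragments:
        buffer += frag
        parts = SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence.
        for sentence in parts[:-1]:
            if sentence.strip():
                yield sentence.strip()
        buffer = parts[-1]
    if buffer.strip():
        yield buffer.strip()


def stream_reply(prompt: str, model_id: str = "amazon.nova-pro-v1:0") -> Iterator[str]:
    """Hypothetical helper: stream a reply from Bedrock, one sentence at a time."""
    import boto3  # imported lazily so the helpers above work without AWS

    client = boto3.client("bedrock-runtime")
    response = client.converse_stream(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 200},
    )
    yield from sentences(text_deltas(response["stream"]))
```

Each sentence yielded by `stream_reply` could be sent to the TTS engine immediately, so the user hears the first sentence while the rest is still being generated.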

Thanks!